Keeping ‘AI in check’: Another tech giant apologizes for violating privacy

Smart home speakers and voice assistants are all the rage, but so are fears that strangers could be listening to your private conversations.

Apple Inc. became the latest tech giant in the past week to apologize for allowing outside contractors to listen to customer recordings from voice assistant Siri in order to improve the artificial intelligence service.

This follows Amazon.com Inc.’s announcement last month that users can disable human review of Alexa recordings, while Alphabet Inc. paused all reviews of recordings from its Google Assistant service.

Amid these privacy concerns, tech companies are also racing to develop the latest AI breakthroughs, such as home speakers that can detect if you’re having a heart attack.

But how do people feel about the creation of technological advances that can change their lives?

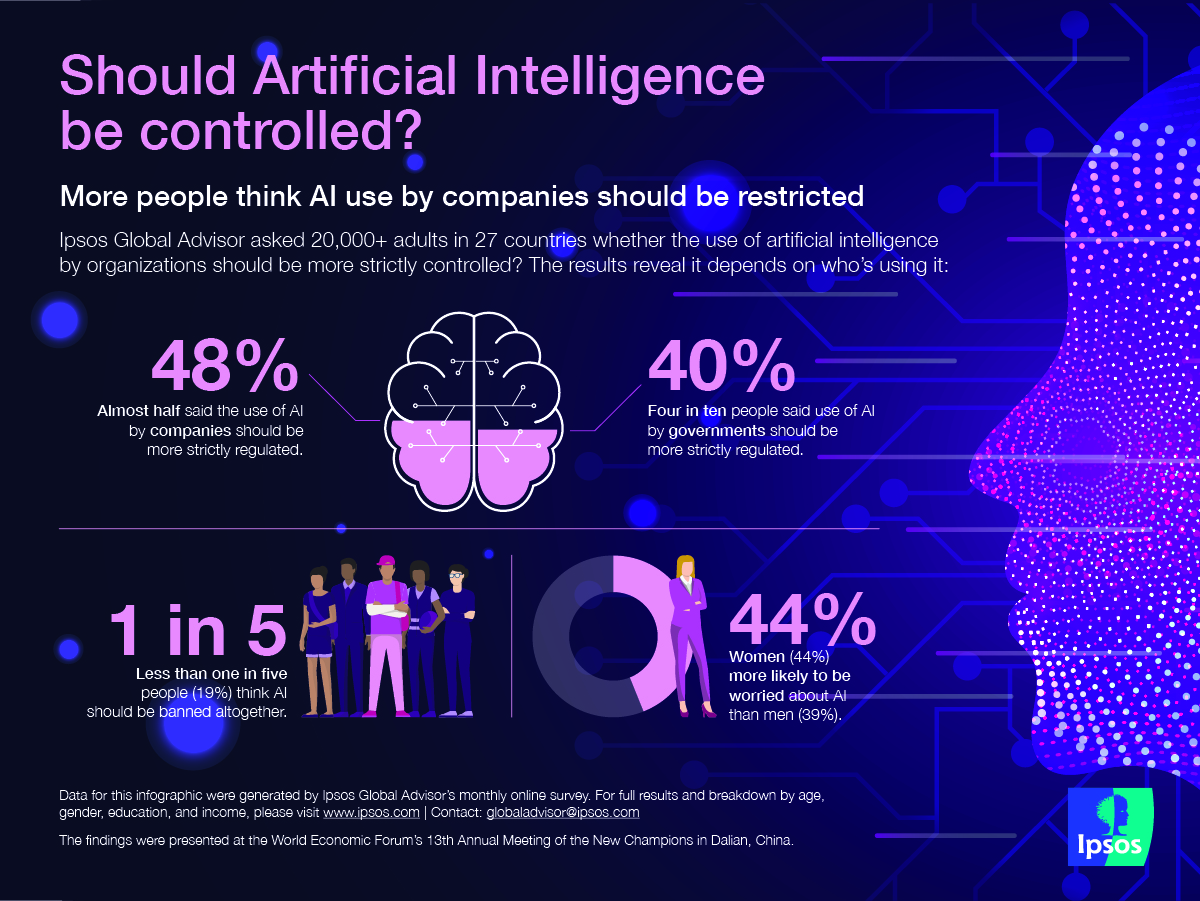

A recent Global Advisor survey found that nearly half (48%) of more than 20,000 people surveyed in 27 countries think AI use by private companies should be more strictly regulated.

That compares to four in ten people (40%) who said government use of AI should be more controlled.

Overall, more people are worried about the use of AI (41%) than are not (27%).

George Tilesch, Chief Strategy & Innovation Officer at Global Affairs, said people are concerned that AI regulation is mostly nonexistent right now - but regulation is inevitable.

“Part of the issue is that AI pack leader companies are sensitive about admitting that AI-powered products that are already commercialized are very much a work in progress, and in many cases need an army of human workers and users to be engaged to train machines or keep AI in check,” said Tilesch, who is writing a book about the impact of AI on society.

Transparency key to development

He adds that upfront transparency about human reviews of data and enforcing a strict opt-in agreement for users could work to help the companies develop AI without invading consumers’ privacy.

“I think tech leaders have a big responsibility to rebalance the AI public conversation that is very much hype-and-bust now,” Tilesch said.

“Companies should be pre-emptively open about both AI shortcomings and the necessary ingredients of AI development that much of the tech elite is aware of, but the message seldom reaches the public.”

In a separate Global Advisor survey conducted across 26 countries late last year, almost two-thirds (64%) of nearly 19,000 respondents said they would be more comfortable sharing their data if they were promised that the information would not be shared with third parties.

As part of its apology, Apple announced that only its own employees will be allowed to review Siri recordings to improve the service when permission is granted by consumers.

Tilesch points out that some companies are calling for regulations themselves and committing to self-regulate in order to prevent a “downward spiral” of how we view AI.

“Regulation is inevitable. The big question is whether it will be up to the task,” said Tilesch. “AI tech pack leaders need to realize the need to formulate consensus on directions and restrictions – and that is not going to be easy.”