Reputation resilience in the age of ‘fake news’

Misinformation, now commonly known as ‘fake news’, is as old as human communication, as is the difficulty in combating it. As Jonathan Swift put it, ‘falsehood flies, and the truth comes limping after it’. It was true in 1710, and it is even more true today.

The internet has – just as the printing press, radio, and television did before it – made the transmission of misinformation faster and easier than ever before, and in the past few months the push to tackle online misinformation has been imbued with a new urgency.

Deepfakes

The past month has seen two doctored videos go viral: one of US House Speaker Nancy Pelosi, edited to make her appear to stutter and slur her words, and a faked one of Facebook CEO Mark Zuckerberg boasting about his power over the billions of people who use Facebook’s products. The former was a malicious attempt at a political smear, the latter an apparently anarchic artistic critique of Facebook; neither was taken down by the internet giant’s platforms.

But the biggest demonstration of the power of what are being called ‘deepfakes’ came from Samsung’s AI Lab, which has developed a machine learning algorithm that can animate a still image of a face so that it appears to move naturally. The technology to create convincing speech in a real person’s voice already exists, provided there is enough sample speech to train the algorithm on.

The implications of these developments are clear – it is now technically possible to create a fake video of any prominent public figure delivering any kind of speech you want, post it to social media, and do incredible reputation damage as it racks up views.

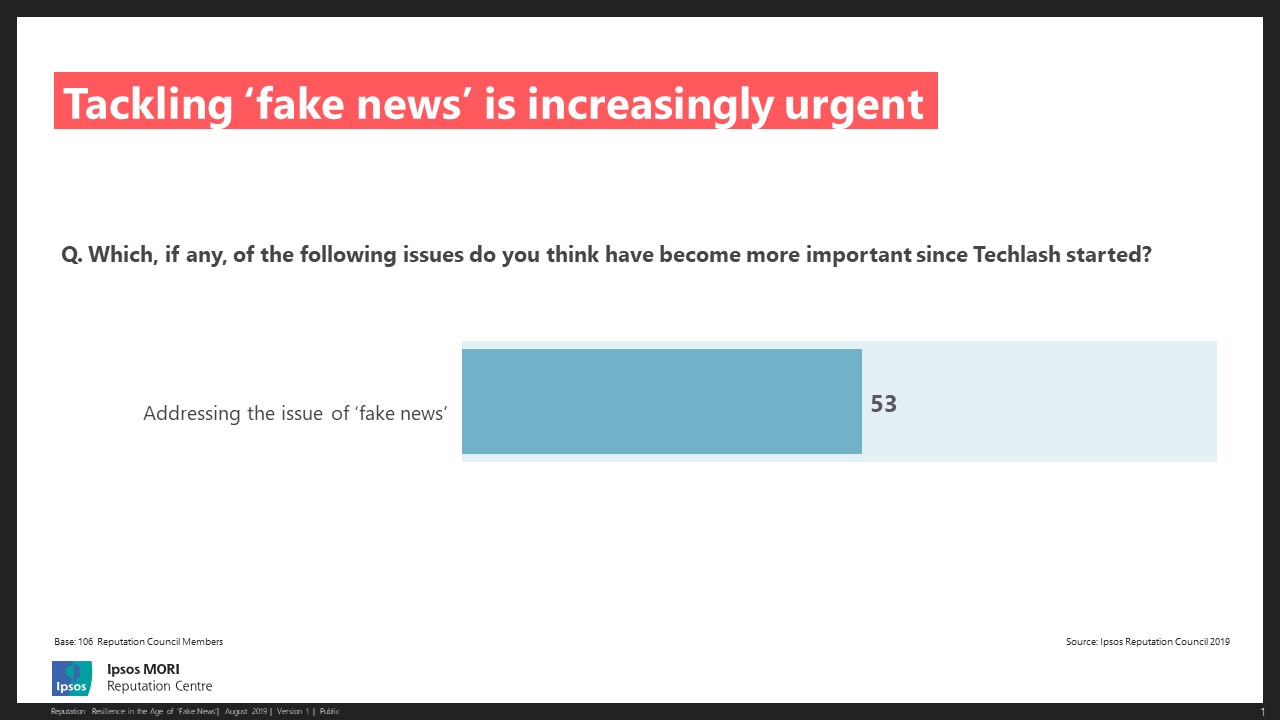

53% of senior corporate comms folk agree that addressing the issue of fake news has become more important since the beginning of Techlash.

Deepfakes represent a sinister escalation in the spread of online misinformation, at a time of growing concern about fake news. More than half of the senior corporate communicators interviewed for the Ipsos Corporate Reputation annual Council report said that addressing the issue of fake news had become more important in recent years.

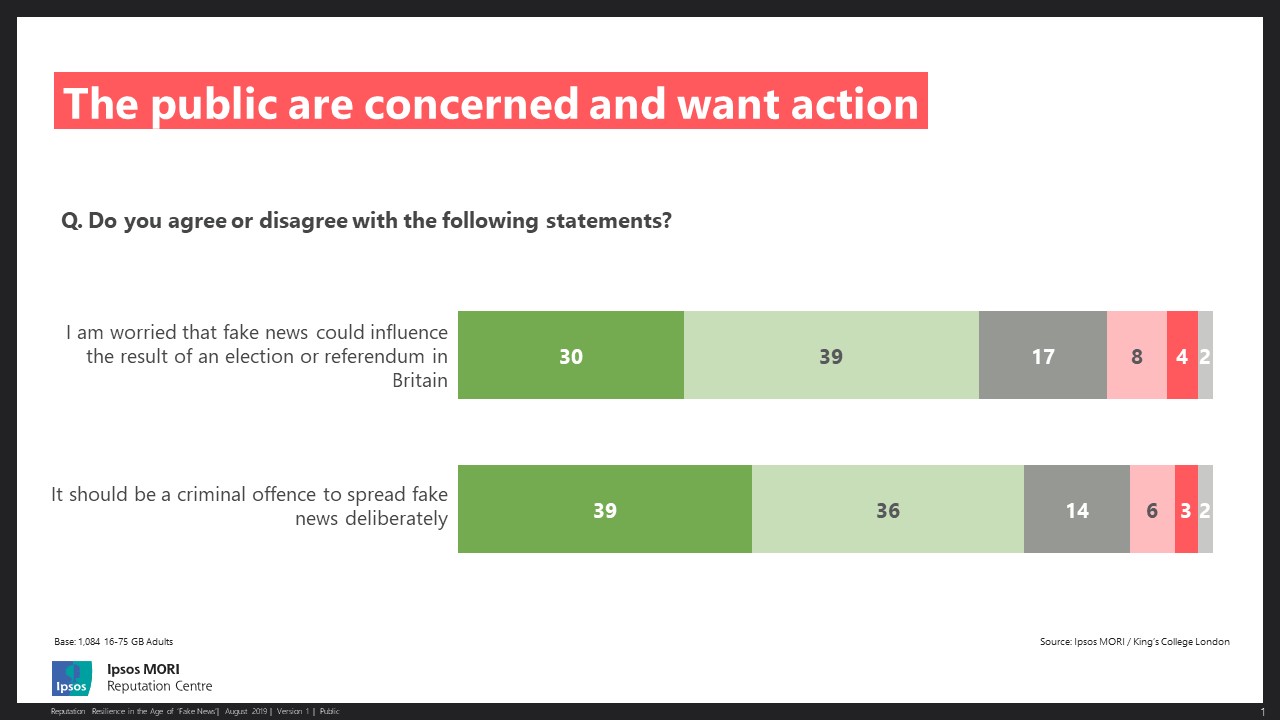

Around seven-in-ten members of the UK public are worried that fake news could influence the result of an election or referendum in the UK. Three quarters think it should be made illegal to spread fake news deliberately.

Causes for concern about misinformation are multiplying. An election campaign could be torpedoed by the spread of a deepfaked video of its candidate appearing to accept a bribe. The stock price of practically any large, listed company could be sent into a spiral by a deepfake of its CEO admitting to accounting fraud. Metro Bank’s accounting error sent its share price plummeting by 60% from mid-January to the end of February, following which false rumours about its safety deposit boxes sparked enormous queues of customers looking to withdraw. Metro Bank’s share price has continued to decline, and now sits 87% below its 2018 high.

Fighting Back

Facebook’s stated policy towards deepfakes is to allow them to be posted as a form of expression, but not to distribute them through newsfeeds, and to attach links to fact checkers to combat misinformation. While this is an admirable attempt to balance freedom of expression with tackling misinformation, it does nothing to stymie the organic reach of fake news through normal users.

Yet outright banning the spread of fake news seems impractical. I feel for the prosecutor required to demonstrate the mens rea of activists sharing viral fake news online.

Regulatory responses are in the pipeline, as are technological solutions: a team of Adobe and UC Berkeley researchers has created a set of machine learning algorithms which can detect facial manipulation done with Photoshop. With a reported 99% success rate for these algorithms, it seems that deepfakes will eventually meet their technological match.

But in the meantime, what can businesses do to mitigate the risk fake news poses?

Building Resilience

No business can prevent malicious actors from producing fake news attacking it – or claiming to speak on its behalf. But the reputation damage fake news can do is a product not of the misinformation itself, but of the reaction the public and stakeholders have to it.

There are two main ways in which businesses can mitigate the impact of fake news. The first is reactive: rapidly denouncing misinformation and getting the truth out. Yet however quickly you respond to fake news, you will always be playing catch-up.

The key to rebutting fake news and protecting reputations is creating time for rebuttal to be effective. Here, the key issue is reputation resilience: would your consumers and stakeholders believe fake news about you, or give you the benefit of the doubt long enough to set the record straight?

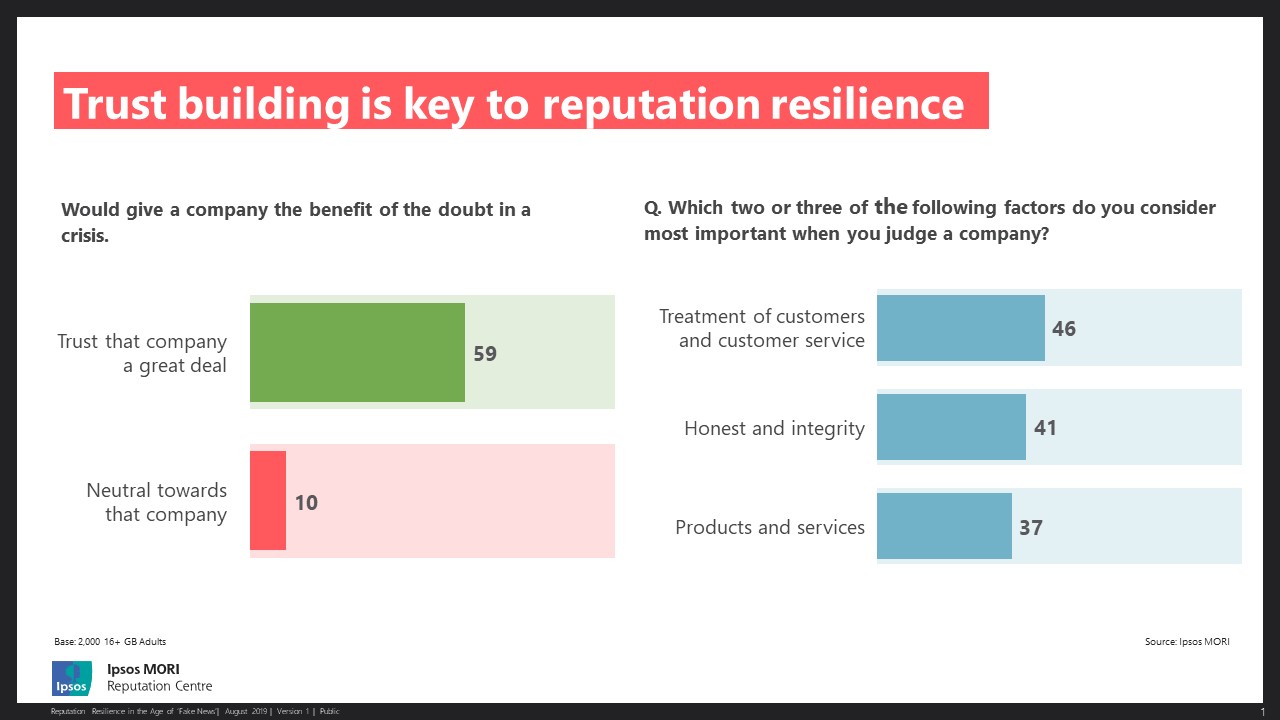

Reputation resilience in the face of crisis is a matter of trust. Most of those who trust a company a great deal say they would give it the benefit of the doubt in a crisis. Among those who are neutral, just a tenth would.

The second way is proactive: building trust over time increases reputation resilience, creating the time and space needed for reactive comms to be effective. When judging a company, the public are most likely to take its treatment of customers, its honesty and integrity, and its products and services into account.

Think:

• Are our products and services high quality?

• Do we follow through on commitments?

• Do we treat our customers fairly?

Show that you are performing well on these, and you’ll build trust.

As fake news becomes more insidious, sophisticated, and viral, it is becoming very easy to see it as a challenge without a solution, but this couldn’t be further from the truth. Build trust, build resilience, and you’ll get the truth out as fast as falsehood can fly.