Navigating the AI Transition

Key Findings

The UK government's newly released AI Opportunities Action Plan lays out a bold and comprehensive vision for being a global leader in artificial intelligence. From investing in critical AI infrastructure and skills, to driving adoption across the public and private sectors, to nurturing homegrown AI champions, the plan sets out how the UK can capitalise on the transformative potential of AI.

Our research shows there is one essential factor that must underpin all these efforts if the UK is to succeed in its AI ambitions: trust. For AI systems to be widely embraced and adopted by citizens, businesses and government services alike, people need to have confidence that the technology is safe, secure, well-governed and aligned with societal values and priorities.

Knowledge and use of AI

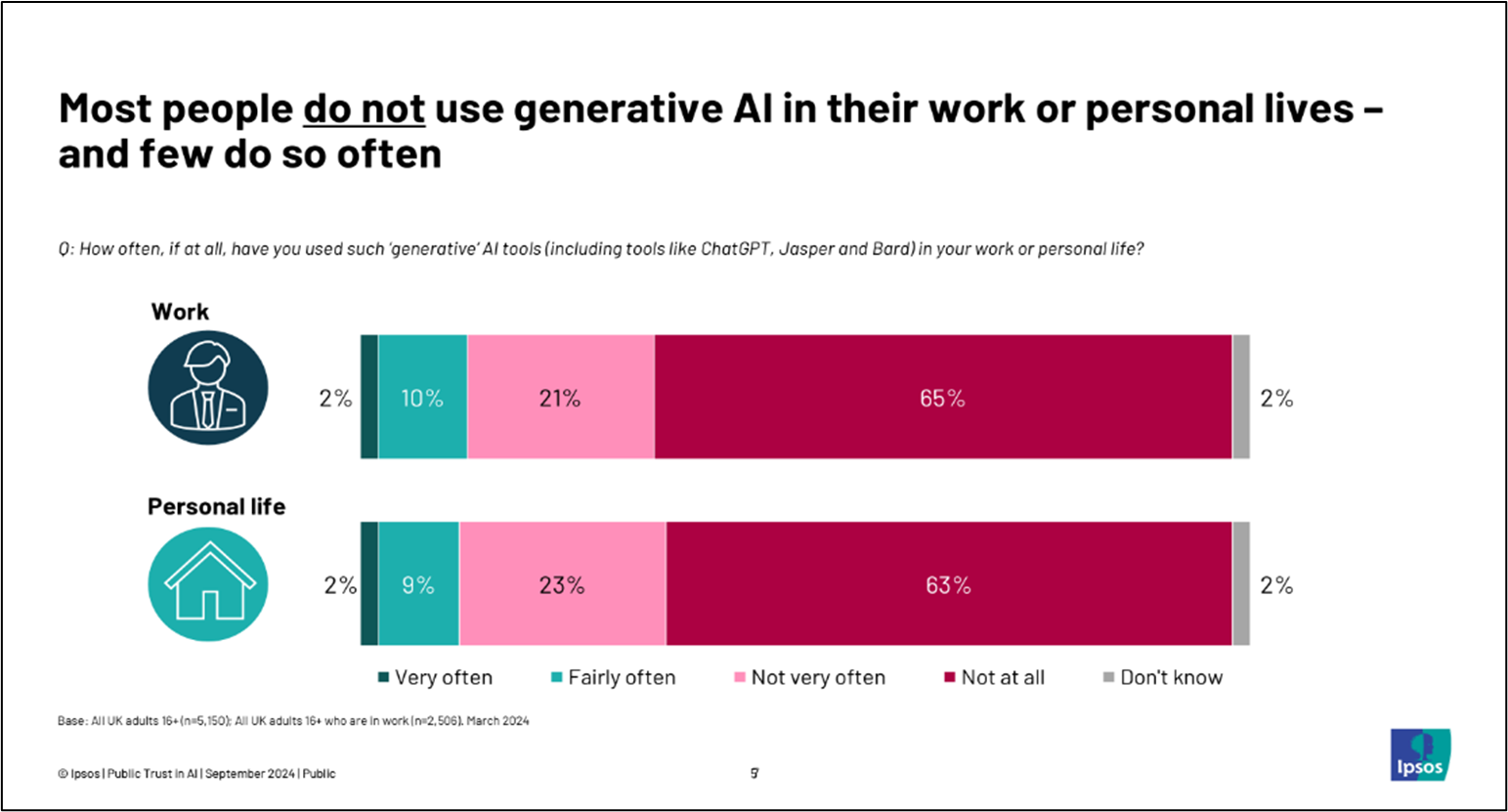

Despite the media and policy focus on AI and growing public awareness, understanding and proactive use of generative AI remain low. Those who say they know more include men, younger people, those in work, graduates, and Londoners.

Those who know more about AI tend to engage more with AI themselves in their work and personal lives. They also have more trust in the technology and feel more comfortable with different use cases. This suggests a potential divide emerging between those who are more knowledgeable and confident, and those with lower awareness who risk being left behind as AI advances. Targeted education and engagement are needed to improve AI literacy and access.

When discussing AI, people are often negative and share discomfort and suspicion. They say they are worried about AI taking away our shared humanity and sense of control. But as we find so often, in practice public views of new technologies are nuanced and depend on the detailed context of how they are used.

Perceptions of AI in practice

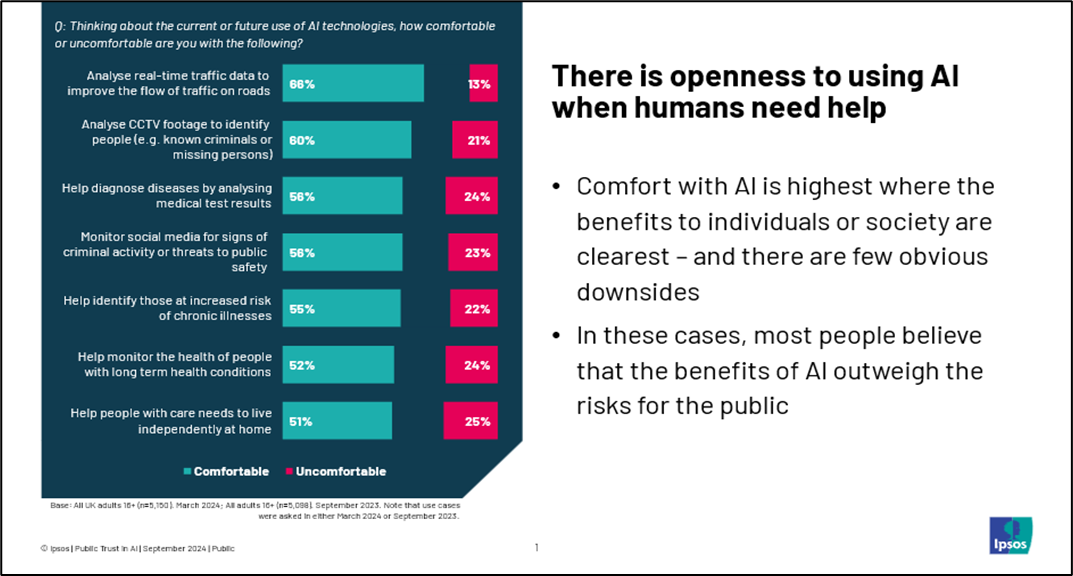

People are open to AI assisting humans but uncomfortable with it replacing human judgement. Our research highlights the desire among the public and businesses for positive, real-world examples of AI being used safely and responsibly to improve work and life, from productivity to health outcomes to engaging with public services.

For example, most people in the UK are comfortable with using AI to improve traffic flow, to identify known criminals or missing persons, and in various ways in healthcare, such as helping to diagnose diseases, identify those at increased risk from chronic illnesses, and monitor those with long-term health conditions.

People are most uncomfortable where AI could be used to mislead by creating ‘fake’ content featuring famous people, or to filter or personalise the content that people see. They are also uncomfortable with AI making important decisions that significantly impact people's lives without human oversight, such as assessing welfare benefits or marking exams.

This means that when it comes to public services, there is openness to specific applications that could enhance delivery, though less support for automated decision-making or resource allocation.

Our research with businesses shows they are looking to the government, training providers, universities, schools and other businesses to improve understanding of AI's benefits and limitations across the UK, while leading by example in setting a high bar for trustworthy AI development and use in the public sector.

Confidence in AI skills

While most people are not yet confident in using AI, those with digital skills generally feel capable of engaging with AI systems. However, there is a critical gap in skills related to understanding and interpreting AI, particularly in areas of safety and privacy, risks and threats, and accuracy and reliability. These skills are crucial for building trust and effective AI implementation.

The workforce will increasingly need to apply AI in various roles, from AI specialists to implementers like researchers and policymakers. Across all levels, key skills will include judging accuracy and reliability, understanding risks and threats, and maintaining information safety and privacy. The UK must cultivate these skills to support innovation, reform and productivity gains; the current focus is on tertiary education and specialist skills.

AI and the future

Our polling has found that the pace of AI regulation is viewed as too slow by most. The UK needs to establish a robust governance framework drawing on global best practices, with public trust and safety as central objectives. Despite doubts about international cooperation on AI, there is a desire for the UK to take a leading role in addressing these issues. Progress on AI regulation and a clear commitment to public concerns are essential to boost trust and acceptance.

More broadly, ongoing public and business engagement to understand evolving attitudes is crucial. Policymakers, regulators and businesses need to collaborate to improve AI literacy, actively involve the public in shaping governance, address the impact on workforce inequality, and support responsible innovation. While the infrastructure and technical capabilities are crucial for the AI transition, public and business trust and confidence are essential for the widespread adoption of AI, and to ensure the UK makes the most of the many opportunities this transition will bring.