People are worried about the misuse of AI, but they trust it more than humans

The Ipsos Consumer Tracker asks Americans questions about culture, the economy and the forces that shape our lives. Here's one thing we learned this week.

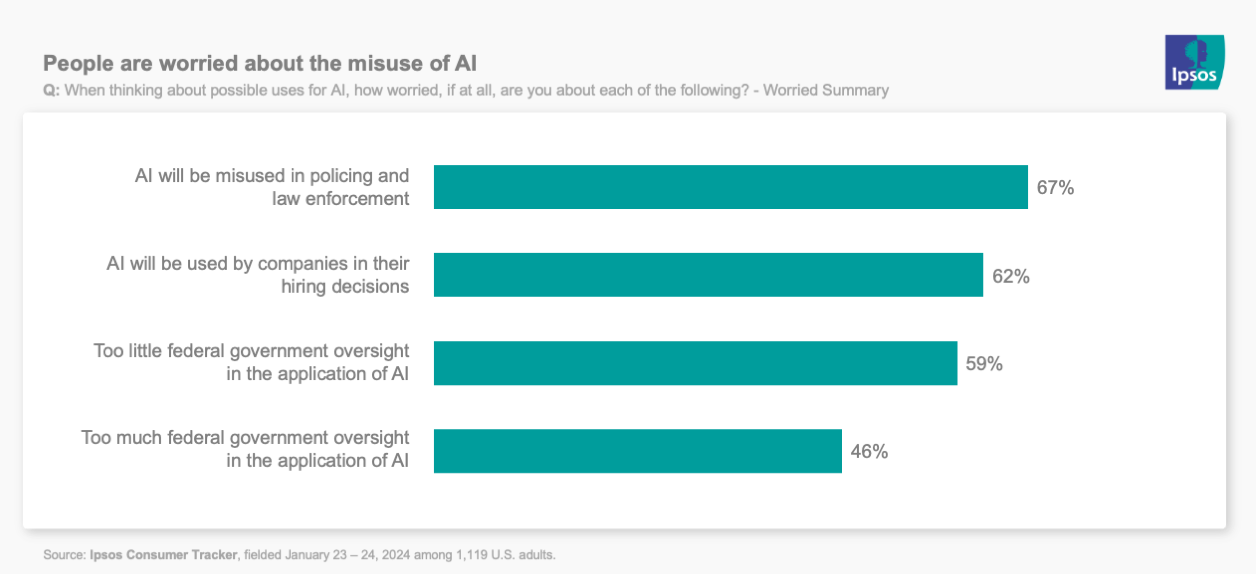

People are worried about the misuse of AI

Why we asked: The AI worry question is part of our ongoing exploration of the tension between wonder at the possibilities AI will open up and worry about the potential costs those technologies will bring.

What we found: It’s been about six months since we last asked this particular battery (we also added a couple of items this time), and worry across most dimensions is holding steady. About three in four of us are concerned that AI will erode data privacy, create content indistinguishable from that created by humans, make it less likely you’ll get to talk to a human customer service agent, and be used to impersonate people in order to gain access to privileged information. Arguably, all of these things are already happening. Nothing in the news in the last six months, including regulation efforts here and in the European Union and promises from the tech industry, has shifted our opinions.

In new items, about two in three are worried AI will be misused in policing and law enforcement, or in corporate hiring decisions. (There have been instances of this happening already, too.) Six in ten think there will be too little federal government oversight of AI. And just under half (46%) think that there will be too much federal oversight.

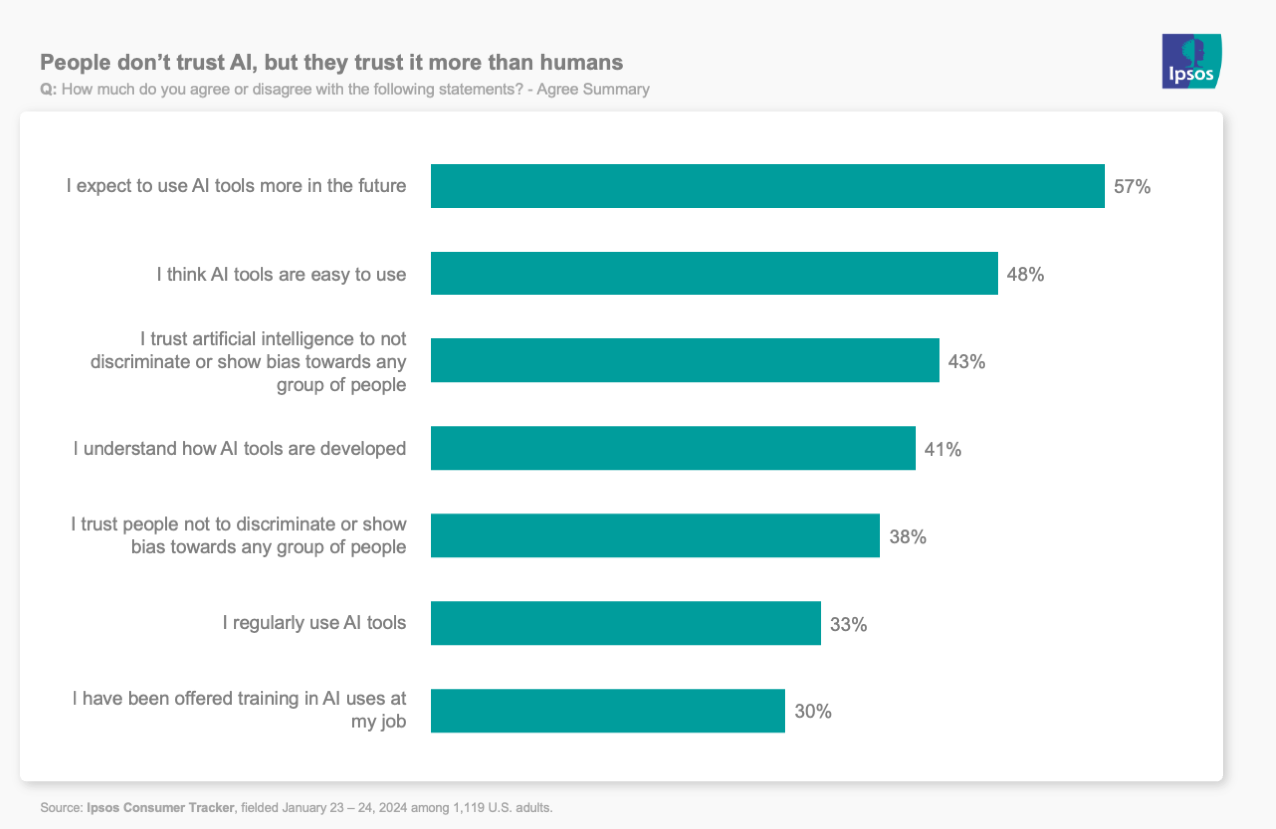

People don’t trust AI, but they trust it more than humans

Why we asked: Because AI is already going to be one of the stories of the year (not-so-bold prediction, even in January).

What we found: Only 43% of people trust AI tools not to discriminate or show bias towards any group of people. That doesn’t seem great, and there are plenty of well-documented issues with this already. HOWEVER, they actually trust AI slightly more than they trust humans (only 38%) in this regard. About one in three people say they are already using some form of AI tool regularly, and most (57%) expect to use them more in the future. Just under half already find these tools easy to use. About one in three of those employed have been offered some sort of training in AI tools at work, and four in ten think they understand how those AI tools are developed.

Apropos of nothing, I asked IpsosFacto, our proprietary generative AI environment, for a good example of one of my favorite cognitive biases. Here’s what it told me.

“The Dunning-Kruger effect is a cognitive bias where people with low ability at a task overestimate their ability. A good example might be someone who has just learned to play chess and, after winning a few games against friends, starts to believe they could compete at a high level, possibly even professional. However, their belief is based on a misunderstanding of their actual skill level and the complexity of the game. This person is not aware of the strategies and depth of understanding that professional chess players possess, thus they overestimate their own abilities.”

More insights from this wave of the Ipsos Consumer Tracker:

Gas prices are falling, but a majority of Americans haven't noticed